Modern Software Engineering: Begin Book Club

by Simon MacDonald

@macdonst

We had a great chat with Dave Farley, the author of Modern Software Engineering. Unfortunately, we ran into a bit of an issue with OBS at the end of the meeting and missed our wrap-up section. The most important thing to note is that Dave has a new book called Continuous Delivery Pipelines, which is available over at LeanPub. I’ve already bought my copy, and I look forward to cracking it open soon.

Video

Transcript

[00:00:00] Simon MacDonald: Well, welcome everybody to the, checks notes, June edition of the book club. If you’re anything like me, you’re still having issues, trying to figure out what day and month and possibly even year it is. It’s wonderful. But yeah. Thank you for joining us all. I just want to mention, before we get started, that everything is covered under the Architect code of conduct.

[00:00:17] You can read that at github.com/architect. Basically, the short version is just, don’t be jerks. Just try to be nice to everybody. Treat people the way that you would like to be treated. So today we have with us Dave Farley, he’s the managing director and founder of Continuous Delivery Ltd.

[00:00:32] He’s an absolute pioneer in continuous delivery, a thought leader, an expert practitioner in continuous delivery, DevOps, TDD, and software design, and has a long track record of creating high-performance teams and shaping organizations for success. So Dave is committed to sharing his experiences and techniques with software developers all around the world, helping them design quality, reliable systems of software.

[00:00:56] And he shares this expertise through his consultancy, as well as his YouTube channel and training courses. If you don’t follow Dave Farley, you should. You get a lot of great nuggets from the little YouTube clips that he posts up on there. Dave is the co-author of the bestselling Continuous Delivery book, and that describes a coherent, foundational and durable approach to effective software development.

[00:01:15] And he’s also the author of Modern Software Engineering, which is the book that we’re here to talk about today. So, Dave, thank you so much for joining us. Hopefully that introduction was not terrible.

[00:01:25] Dave Farley: No, it’s a pleasure. And thank you for asking me. If you can’t see me, I’m sorry. If you can see me, I’m even more sorry.

[00:01:35] Simon MacDonald: Excellent. Yeah, and folks, you know, this is an opportunity to ask Dave questions. I’ll do my best to moderate things, to keep things moving along. If you’re shy and you don’t want to unmute yourself or unmute your video to ask a question, just please send me a direct message on Discord, I’m @macdonst, or, you know, just hit me up in the voice text channel.

[00:01:57] So I mean, honestly, I need to ask a question and I think it’s probably more of a kind of issue where it’s like the two sides of the Atlantic ocean, just like looking at things differently. But when I was reading your book, I kept on coming across the term a big ball of mud. And I would probably describe exactly those same scenarios as a bowl of spaghetti.

[00:02:20] And is, is that just me or.

[00:02:23] Dave Farley: I wasn’t quite sure it was the difference between the different sides of the Atlantic, but it might be. It’s a commonly used phrase in the group of people that I communicate with to describe a nasty, tangled system. So yeah, you could certainly call it a big bowl of spaghetti.

[00:02:43] And I’ve certainly heard that term before. But it’s something that I’ve heard referred to, I think, on both sides of the Atlantic. Anyway, the idea of a big ball of mud is architecture by accident. It’s where many complicated systems end up, where everybody’s frightened to change them.

[00:03:04] And working to try and avoid that kind of destiny is really what a significant part of my book is about.

[00:03:14] Simon MacDonald: Yeah, I really love that term. I think it was the first time that I have seen it used. But what I like about it over the bowl of spaghetti metaphor is that with the bowl of spaghetti, you can kind of see the different threads and know where to pull at them in order to untangle things. With a big ball of mud, it’s very opaque and you have to get into it to figure out what’s going on.

[00:03:35] I thought that gave an extra layer of description of the problems that we run into in software. I’m going to basically, I’m just going to steal that idea from you. Thanks for that.

[00:03:46] Dave Farley: It’s a pleasure. It was eight or 10 where I got it originally, but it’s certainly, it’s certainly not one of my, my inventions.

[00:03:55] Brian Leroux: So I’ve got a question, Simon, if I can jump in. Are we still doing the dramatic readings? Is that a tradition that we’re letting fall by the wayside?

[00:04:05] Simon MacDonald: I don’t know if Dave has had a chance to prepare anything. So I didn’t want to put him on the spot, but Dave, Brian has now, so we’ll just roll with it.

[00:04:14] Brian Leroux: You don’t have to do it though,

[00:04:15] Dave Farley: That’s fine. I, if you would like me to read some of the book, I can certainly do that.

[00:04:26] Brian Leroux: Yeah, that would be awesome. And I think the most important part actually happens really early on where you provide a definition for modern software engineering. We throw this term around a lot and I don’t think it means what other people think it means.

[00:04:40] And I would really love it if the industry could come together behind your definition.

[00:04:45] Dave Farley: So let’s read the first chapter. What is software engineering? Let’s start there. So, okay. If you’re happy for me to go and read that to you, how long would you like me to read for you?

[00:05:03] Brian Leroux: So, often we sort of jokingly always ask the author to do a dramatic reading.

[00:05:07] I would say just a dramatic reading of the definition part of the story would be great, and it’s also something we can pick at, because there’s a lot to unpack just in trying to define a term.

[00:05:18] Dave Farley: So here’s a part from chapter one, What Is Software Engineering? Our working definition for software engineering that underpins the ideas in the book is that software engineering is the application of an empirical, scientific approach to finding efficient, economic solutions to practical problems in software. The adoption of an engineering approach to software development is important for two main reasons.

[00:05:44] First, software development is always an exercise in discovery and learning. And second, if our aim is to be efficient and economic, then our ability to learn must be sustainable. This means that we must manage the complexity of the systems that we create in ways that maintain our ability to learn new things and adapt to them.

[00:06:08] So we must become experts at learning and experts at managing complexity. There are five techniques that form the roots of this focus on learning. Specifically, to become experts at learning, we need the following: iteration, feedback, incrementalism, experimentation, and empiricism. This is an evolutionary approach to the creation of complex systems. Complex systems

[00:06:37] don’t spring fully formed from our imaginations. They’re the product of many small steps, where we try out our ideas and react to success and failure along the way. These are the tools that allow us to accomplish that exploration and discovery. Working this way imposes constraints on how we can safely proceed.

[00:06:57] We need to be able to work in ways that facilitate the journey of exploration that is at the heart of every software project. So as well as having a laser focus on learning, we need to work in ways that allow us to make progress when the answers, and sometimes even the direction, are uncertain. For that, we need to become experts at managing complexity. Whatever the nature of the problems that we solve or the technologies that we use to solve them, addressing the complexity of the problems that face us and the solutions that we apply to them is a central differentiator between bad systems and good. To become experts at managing complexity,

[00:07:37] we need the following: modularity, cohesion, separation of concerns, abstraction, and loose coupling. It’s easy to look at these ideas and dismiss them as familiar. Yes, you’re almost certainly familiar with all of them. The aim of this book is to organize them and place them into a coherent approach to developing software systems that helps you to take the best advantage of their potential.

[00:08:03] This book describes how to use these 10 ideas as tools to steer software development. It then goes on to describe a series of ideas that act as practical tools in their own right to drive an effective strategy for any software development. These ideas include the following: testability, deployability, speed, controlling the variables, and continuous delivery.

[00:08:28] When we apply this thinking, the results are profound. We create software of higher quality, we produce work more quickly, and the people working on the teams that adopt these principles report that they enjoy their work more, feel less stress, and have a better work-life balance. These are extravagant claims,

[00:08:47] but again, they’re backed by the data. I’ll stop at that point. That’s pretty great. I love it.

[00:08:54] Brian Leroux: There’s so much to pull on here that is worth talking about. I mean, one question I guess I have is, what’s taking us so long as an industry? You mention this a bunch in the book, but we get really worked up and hyped up about kind of the shiny aesthetic stuff, and we don’t put enough focus on the semantics and the outcomes and the goals of what we’re trying to build.

[00:09:23] Why do you think that is? And what’s the antidote to this hype fever?

[00:09:30] Dave Farley: I think that there are two reasons. One of them is that the kind of people that a career in software development appeals to are, rather obviously, interested in the technology, in computers and what you can do with them.

[00:09:48] So we’re interested in the tools, in the same way that a carpenter is probably interested in the saws that they use or the plane that they use as their tools. Our problem is that then we get a bit distracted by that. The other aspect to this is that our industry kind of thrives on building and selling tools.

[00:10:11] So there are software vendors who are busy trying to sell us new things to use: new operating systems, new compilers, new languages, new ideas, whatever it might be, you know, the latest framework for building web applications or whatever. At some level there’s a vested interest in pushing these things.

[00:10:36] It seems to me that almost none of those things are at the core of either what we do as a profession or what makes us good at it. I’ve had the privilege, during the course of my career, of working with some genuinely world-class software developers. I’ve worked on some interestingly difficult problems and worked with some great people at solving them.

[00:11:02] And at least two or three of those people, I think, are people we would all tend to think of as, you know, great software developers. And my observation is that great software developers will do a good job in a technology that they’ve never seen before. It might not be idiomatic, it might not be perfect, but there’s something that goes deeper than just the tools and the kinds of things that I was talking about.

[00:11:31] They will work more experimentally. They will try out ideas. They will try to proceed in small steps. They work hard to control the variables in what they do. They’ll try really hard to compartmentalize the system. The best software developer that I’ve worked with is a good friend of mine.

[00:11:46] His name is Martin Thompson, and he’s very widely regarded as a world-class expert in high-performance systems and concurrency. The people that write the JVM ask him for advice on how to do a better job on some of the high-performance stuff. He’s a great software developer.

[00:12:05] If you look at his code, it looks simple. That’s what makes him great. And it makes him great because he has a laser-beam focus on ideas like separation of concerns, modularity, cohesion, those kinds of ideas. And he uses abstraction and loose coupling to design systems that are just nice to work with.

[00:12:26] And you can kind of approach his code and say, oh yeah, I can see what this does, almost instantly, even if you don’t know, you know, what it does. He just writes such good code. And that’s not related to an expertise in a language. You know, if he writes code in a language that he’s less familiar with, those things still show through.

[00:12:46] And so I think there are these deeper principles that matter, which is what my book is about. But I think that these other things that you are alluding to tend us towards focusing on the wrong things. You know, if a carpenter became overly obsessive about, you know, “I can’t build this chair without the right kind of saw,” they’re not a very good carpenter, you know?

[00:13:10] So think about what it is that we believe really matters.

[00:13:19] Brian Leroux: There’s also a flip side to it too. And you get into this in the book, where there’s a tension between good craft and good engineering. And, you know, we’ve all worked with the 10x developer that’s able to pump out seemingly perfect code immediately without even a debugger.

[00:13:37] And that’s all great, but not everyone’s that person. What I kind of love about the theme of both this book and your previous work and other books like Accelerate is that these are outcomes that are available to all of us, and it’s not about craft or talent that’s innate, it’s about principles and practices.

[00:13:55] And if you follow, you know, just this handful of practices, from empiricism to trunk-based development or continuous delivery, then you get a good outcome on the other side. And it feels to me like maybe it’s a maturity thing, because the industry is just a little bit young still,

[00:14:16] although some of us are getting pretty gray, a little bit tired with the treadmill. Can you speak to that a little bit too? Because I feel like, as software developers, most of us strive towards craft. But craft might not actually be the answer.

[00:14:35] Dave Farley: Yes. And, I do talk about that in the book I think craft was a valuable and probably necessary step because it’s a better fit.

[00:14:47] So I think if you look at the history of the way that the industry evolved, as you say, we’re a relatively young industry. You know, we’ve been doing this professionally for probably, well, 60 years or thereabouts, with explosive growth from the 1980s onward. So maybe 40 years of, you know, real, world-girdling effort.

[00:15:14] But Bob Martin points out that the average tenure of a software developer is five years. So most of us are relatively inexperienced. There isn’t a big cohort of very experienced people out there compared to the number of relatively inexperienced people. So we do an awful lot of forgetting the past.

[00:15:35] We’re not a good industry at learning things and learning from the past. If you talked to a bunch of physicists and they didn’t know who people like, you know, Feynman or Einstein or Pauli or Maxwell were, you’d think they weren’t very good at their jobs. If we think about the experts in our discipline, people like Fred Brooks, then, you know, not everybody’s heard of those people. Not everybody knows what contributions they made. And so this sort of rediscovery goes on and on. And that’s the kind of thing that you would expect from a craft-based industry, because there’s not a big body of agreed knowledge.

[00:16:20] We kind of assume that everybody learns themselves from first principles. What we do is much more complicated than that. There are some principles that we know to be true and important. And, what I was trying to do with this book really was to try and get some of those things that, you know, if you do these things, whatever the nature of your software, you’re going to do a better job.

[00:16:41] So I was trying to get to that, you know, I was conscious of the outcome. I was striving for that, as I say, I’m a big believer in the importance of craft. And I think often when we talk about this, we confuse the idea of craft with creativity and we dismiss the creativity in engineering. And I would argue the reverse.

[00:17:06] I would argue that if you look at other disciplines in human experience, then engineering is probably the peak of creativity. If you look at what Elon Musk and SpaceX are currently doing, building space rockets, it’s enormously creative. It’s within physical constraints, so it’s constrained by the physics of the problem to some degree, but engineering is different to that.

[00:17:32] So it’s working, they’re being inventive and creative. They’re trying out new ideas. That’s the kind of discipline that I aspire to for ours. That’s where I think we should be pitching, to try and get that kind of exploration, discovery, creativity. So I think that we should take on the craft movement and take some of the advantages that they brought, that creativity, that focus on

[00:18:02] learning and discovery and so on, but I think we should do it in a little bit more organized way that allows us to build on what we already know. We’re not very good at dismissing bad ideas from people that are trying to be software craftsmen. There are people who, you know, still skip testing, don’t jump to continuous integration, organize with waterfall development techniques, and all of those kinds of things, which we know don’t work.

[00:18:30] So why are those people still doing that?

[00:18:35] Brian Leroux: You had a funny comment in the book where you were like, I’m going to butcher it, I don’t quite remember how it went, but basically you were saying Fred Brooks observed that 10x gains were maybe not possible, but what he missed was that we could have 10x losses. I just

[00:18:52] definitely laughed out loud when I read that, because there’s been a few times where I’ve been caught up on the hype train and chosen technology based on what I heard rather than what I was trying to achieve. And, you know, I had spectacular failures on the other side, chasing this sort of golden hammer or perfect idea that didn’t really exist.

[00:19:12] And it’s really hard, because it’s hard to sell the idea that you don’t need something to a person who’s convinced themselves they need it. And it’s also hard to predict the future, because, you know, the cynics are always going to be there. So it’s very easy to be like, well, you’re just being cynical.

[00:19:31] You know, “I know I’m going to have a great outcome on the other side of this technology.” Then, inevitably, they don’t.

[00:19:40] Dave Farley: Yeah, absolutely. I think often we look for success in the wrong places, which again is kind of what I’m trying to get to with the book. I did a video a few months ago on my YouTube channel about low-code systems.

[00:20:01] And one of the things that seems to me interesting about low-code systems is that they don’t really solve the deeper problems. So, you know, if you’re building something simple, you’re building a simple website, you can use something like Squarespace or something like that, where you can kind of, you know, draw pictures of your website

[00:20:25] and that builds the website. That’s fine to a level of complexity, but then what happens when you come to change it? What happens when you need to modify it? What happens when it grows beyond a certain level of complexity where you can hold all the connections in your head? And for my YouTube video, I came up with a little example.

[00:20:43] I use some low-code tools in my own business. I had to use an integration tool that integrates my training site with my mail list. This integration tool allowed me to kind of draw pretty pictures of joins between these things and go through some wizards that got me to fill in some details and send messages between these two systems.

[00:21:09] And I got one of them wrong. It was about 10 steps to configure this system, and I’d done this with five different integrations. So I tried again and I got it wrong a second time. So that’s now 50 different actions that I’ve carried out, you know, five times 10, for each of the different attempts.

[00:21:30] So that’s a hundred different actions. It took me until the third attempt to get that integration right. So, low code, but high manual labor. Exactly. And that’s the problem. The problem wasn’t my ability to type. I reckon I’m a good enough programmer that, if I’d approached this as a programming problem, it would have been quicker, because I would have been more wary, more cautious.

[00:21:57] I would’ve written some tests and I would probably have got to the solution faster if I’d just treated it as a programming problem. But I wasn’t in that mindset. I wasn’t using that, because the tools led me somewhere else. And the real problem there is dealing with information and abstraction, and low code just doesn’t do it.

[00:22:17] Why is it that in programming and software development we come up with all sorts of tools? We have things like version control systems and automated testing and deployment mechanisms so we can, you know, step back from a mistake. Low-code tools mostly, often, frequently don’t give you those sorts of things.

[00:22:34] So it’s just an example, but there’s something deeper going on with software: the hard part of software isn’t the typing. If you’re having trouble with the syntax of the language that you’re using, get a better IDE and spend a bit more time learning the language. That’s not the hard part. It’s the way that we carve up the problems.

[00:22:51] It’s the way that we organize our thinking. It’s thinking about how to make things more modular or more loosely coupled, so we can change one part of the system without affecting another, without ending up with the big ball of mud that we started off talking about.

[00:23:08] So I think those sorts of things are deeply at the heart of our discipline. I think it’s interesting, you know, but I’m a grumpy old man these days, and I’ve come to the conclusion that our discipline is much more at the level of just how we deal with information.

[00:23:30] Yeah, our job is to use information technology to solve practical problems in the real world for people. So it’s much more about how we handle the information than it is about the tools. The tools are a means to an end, not an end in themselves.

[00:23:50] Brian Leroux: And yeah, you’ve mentioned this. Oh, sorry, I cut you off. Please. I said, you mentioned this in the book, and you’ve got a really succinct way of putting it, where you say outcomes over mechanisms.

[00:23:57] And I actually think, and you mentioned this in the book as well, that another piece of this is Accelerate. I felt like the Accelerate book was like an unlock. Like I had found the manual to, you know, maybe having some degree of sanity in a world that’s gone insane. And a lot of Accelerate kind of just confirmed things

[00:24:17] I already had implicitly known for years because of books like Continuous Delivery. But you mention in your book that it misses one key point. We can move fast, we can follow all these practices, but we still might be building the wrong thing. And that’s a much more subtle problem that I’m still not entirely sure how we address, because the ultimate thing there is, it comes back to your ideas about empiricism and learning.

[00:24:43] We should be testing fundamental business ideas, not syntax, not new tools, not new databases, but, like, what is the purpose of this software and how do I achieve that purpose?

[00:24:54] Dave Farley: Absolutely. We probably need to be testing both, but absolutely we need to be testing those things as well.

[00:25:02] There’s a book coming out, like to be CA, which is about adopting SRE, site reliability engineering, and I was asked to write the foreword. So I’ve had a preview of the book, and I think it’s an important idea. And one of the things that it talks about kind of resonated with me in what you’re describing, which is that with SRE,

[00:25:26] we tend to think about it in technical terms, because of all the reasons that we’ve just been describing. We think about SRE in terms of error budgets and things like that. It was just learned by living and all that kind of stuff. But it takes a tiny little step to think about it in these terms: what if, for every feature that we came up with, we decided what the measurement was by which we would determine its success and what value we expected to achieve?

[00:25:56] So we’d set ourselves a service level objective against a service level indicator of some kind. What if those are commercial goals or usability goals, as well as the technical goals of uptime and so on? Then we’re into hypothesis-driven development. We’re into an engineering discipline where, you know, at the start of the work, we’re going to predict what we think the outcome should be and figure out how we’re going to measure the outcome.

[00:26:24] And that’s kind of what I mean by working experimentally, which is one of the key ideas in my engineering book. So if we approach every piece of work, every change to our production system, as a little experiment, we think about, so, what’s my prediction?

[00:26:44] What’s my hypothesis here? What indicator on the dial am I hoping to move with this change? That’s my service level indicator. And what level on that indicator would I count as success, or what level below that threshold would I count as failure? Even better experiment.

[00:27:10] Then I’m defining an experiment that’s going to focus my mind on how I control the variables, so I’ll be able to understand the results from my experiments. That might be in the form of an automated test. It might be, you know, I’m going to measure latency and throughput for performance reasons, or it might be putting a change out into production and doing some form of A/B testing between different user groups and seeing who likes what.
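The idea Dave describes here, stating a prediction up front, picking an indicator, and fixing the success threshold before the change ships, can be sketched in a few lines of Python. This is only an illustration and not something from the book or the conversation: the `Experiment` class, its field names, the checkout example, and the numbers are all hypothetical.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Experiment:
    """One change to production, framed as a small experiment (hypothetical model)."""

    hypothesis: str         # the prediction, written down before the change ships
    indicator: str          # name of the service level indicator (SLI) being watched
    objective: float        # level of the SLI that counts as success (the SLO)
    higher_is_better: bool  # True for e.g. conversion rate, False for e.g. latency

    def evaluate(self, observations: list[float]) -> bool:
        """Return True if the mean observed SLI meets the objective."""
        if not observations:
            raise ValueError("no observations: the experiment measured nothing")
        observed = mean(observations)
        if self.higher_is_better:
            return observed >= self.objective
        return observed <= self.objective


# Hypothetical commercial goal, rather than a purely technical one like uptime.
checkout = Experiment(
    hypothesis="A one-page checkout raises the share of carts that convert",
    indicator="checkout_conversion_rate",
    objective=0.30,
    higher_is_better=True,
)

# Made-up daily measurements collected after the change went out.
print(checkout.evaluate([0.28, 0.33, 0.35]))  # mean is 0.32, so this prints True
```

The point of the sketch is the ordering: the objective is fixed before any observations exist, so the result can count against the hypothesis as easily as for it.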

[00:27:35] But I think that kind of thinking ought to be at the root of our discipline if we’re going to do a good job. That’s how we solve really hard problems. We don’t solve really hard problems by crossing our fingers and guessing. We guess at the start, and then we change that into an experiment, or

[00:27:55] Brian Leroux: maybe it’s just a series of guesses, but small ones hopefully

[00:27:59] Dave Farley: proving along the way.

[00:28:01] Yeah, I

[00:28:02] Simon MacDonald: No, I was going to say that is one of the things that Dave addresses in the book, that we’re absolutely terrible at guessing what our users want. So we need to keep those changes small and continuously iterate on them so that we can get the data that we need in order to figure out if we are fixing their issues.

[00:28:18] And I think there was a stat you listed where Microsoft found that only 30% of the changes that they made to the software were things that the users actually wanted.

[00:28:30] Dave Farley: It was even worse than that. The numbers were that two thirds of the features that Microsoft produced delivered zero or negative value for Microsoft.

[00:28:47] That’s so brutal, but not surprising. Then the only sane thing to do is to move quickly in small steps, so we can figure out which are the bad ideas and eliminate them.

[00:28:59] Brian Leroux: I mean, this speaks to a startup and startups looking for product market fit. They’ve got no choice. They’ve got this sort of existential drive, hanging over their heads to, to make these experiments and find the value.

[00:29:12] What about big companies? Like especially larger organizations, who’ve kind of calcified a bit. They get resistant to change. I remember a while ago at Adobe when I worked there, I was talking about our lead time and we had a slow Jenkins build for one of our servers and I wanted to get that down.

[00:29:30] So we’d get more iterations. And their leadership was like, well, why would you want to make that faster? You know, we want to move, like, deliberately, which I thought was

[00:29:42] wild at the time, but, you know, also fairly prevalent thinking.

[00:29:48] Dave Farley: Yeah. I, I think, I think you’re right and it is prevalent, but I also think that it’s deeply wrong.

[00:29:53] And I think probably one of the most influential works of the last decade is the book that you mentioned earlier, which is Accelerate. Accelerate says all sorts of really, really interesting things, as does the State of DevOps report that kind of backs up Accelerate with data.

[00:30:17] One of the things that it says is that there’s no trade-off between speed and quality. If you want to do high-quality work, it’s essential that you move fast. If you move slowly, all of the data says that you create lower-quality work. So it’s not just that there’s no trade-off, it’s that this is a virtuous circle, and the reasons, when you stop and think about them, are fairly obvious.

[00:30:39] If we make changes in many small steps, then the risk associated with each small step is lower because the change is small and simple. If the change is small and simple, there are fewer places for mistakes to hide. So there’s less chance that we’re going to screw up in some big way. So if we’re releasing changes like Amazon releases changes, once every 11 point something seconds, 11.2, I think it is, if we are releasing changes to production every 11.2 seconds,

[00:31:09] we’re going to be good at releasing change. First of all, we’re going to make sure that we’re good at it. And each change is going to be small and easy to back away from if it’s bad. So that’s a good route. But one of the things that the Accelerate book says that, I must confess, made me laugh the first time I read

[00:31:30] it was that if you work in a regulated industry and you have something like a change approval board that authorizes changes into production, that’s negatively correlated with quality. You will produce lower-quality software if you’ve got a change approval board, because it goes slower, and going slower is negatively correlated with quality. So they can measure that effect.

[00:31:55] And so the safest thing to do, which is completely counter-intuitive, but this is why evidence and experimentation and data matter, the safest thing to do is to release change in small steps, more quickly. So, what you said about big companies tending to become more resistant to change and ossifying and all that sort of thing,

[00:32:20] I think that’s absolutely true, that’s what they do, but I think what’s really fascinating is that some of this kind of lean thinking is crossing backwards and forwards between manufacturing and software development. We, as software development people, are pushing the boundaries of some of this engineering thinking, but so are manufacturers. If you look at SpaceX and Tesla, both are genuinely first-class continuous delivery companies that practice continuous delivery.

[00:32:58] I heard something recently, which is striking when you stop and think about it: SpaceX is happy to update the software 40 minutes before a launch. This is sometimes on space rockets that have people on board. They’re updating the software because they’re doing continuous integration; they’re changing things all the time.

[00:33:19] The tests passed, so up that goes to the space rocket, minutes before the launch. Tesla recently decided that they would modify the Model 3 production line. So the Tesla factory is a software-driven entity in its own right. So Tesla made a change that changed the maximum charge rate for the Model 3 from 200 kilowatts to 250 kilowatts.

[00:33:49] This is a change that requires rerouting a power distribution cable in the car. So, physically modifying the design of the car and reconfiguring the factory to be able to make that modification. The change took three hours before they were producing cars with the new design, with an increased charge rate, and it was a software-only change.

[00:34:13] So, yeah, I recognize that some organizations, as they get bigger, become more ossified. That’s not because they must; that’s because they do, and they are less efficient as a result. They’ve always done it that way, or just

[00:34:31] Brian Leroux: a bad habit. I think of Amazon too, as another example of a company that moves pretty fast for the size that they are at.

[00:34:38] And they’re also able to do it without any breaking changes, which is kind of unheard of even at the cloud level. They impressed me quite a bit with their release cadence. And it’s a similar thing where they will just ship. And Werner Vogels, the CTO of Amazon, very famously will say things will fail.

[00:34:59] And if your plan is to have no failures, you’re going to fail at that too. You might as well plan for failure and make it as cheap and easy as possible. And like a muscle getting exercise, the more you do it, the better you’ll be at it, and the less likely you will see failure. It is counterintuitive.

[00:35:18] Dave Farley: I think that’s an interesting thing.

[00:35:21] And it’s one of those things that we can learn from other engineering disciplines. One of my interests outside of software is aviation and flying. If you look at the evolution of airplanes, the engineers don’t get these things right first time. It’s a permanent and continual exercise in learning, discovery and, you know, reinvention of the solution.

[00:35:50] So, there was some fantastic data a few years ago. In 2017, commercial aviation flew the equivalent of two-thirds of the population of the planet in that year. And in that year, not one person died as a result of a commercial aviation accident. That was the first time that happened.

[00:36:09] But that’s because, you know, there’d been over a hundred years of development of airplanes, and airplanes crashed. And so, you know, they learned from the crashes. One of the reasons why they retired Concorde was that the engines were in the wrong place and there was a risk of fires. So all of these things, that’s how engineering proceeds.

[00:36:28] It proceeds by learning things from production: empirical learning, discovery. Thinking about the ways that things can go wrong and then finding out that we didn’t get all of those guesses right. And finding out what actually went wrong and correcting those things as well. And it’s just this continual process of improvement and learning.

[00:36:46] And I think that’s another aspect to this, where these small, frequent changes and our ability to maintain that pace are so important. It makes

[00:36:56] Brian Leroux: systems more durable. And I find, at least for myself anyways, there’s no real situation where I’ve got a whole system in my head. Things have grown, you know, like the levels of abstraction have grown to a point where we are able to create really incredible software systems now in a fairly short amount of time.

[00:37:17] But, you know, remembering the nuance between today’s CloudFormation or tomorrow’s DynamoDB query, or next month’s whatever, it’s almost impossible. So we have almost guardrails, you know, like I’ll make a change and I’ll deploy, ’cause it doesn’t matter. It’ll go to staging and if it works, cool; if it doesn’t, that’s cool.

[00:37:37] I learned something and no one got hurt and it’s going to be fine, but that’s a safety net that’s pretty recent. We didn’t used to work that

[00:37:47] Dave Farley: way. Indeed. And we use techniques like, you know, version control, infrastructure as code, and automation as kind of adjuncts to our memory, so that we can record a take on what works right now and replay that over and over again.

[00:38:04] And we don’t have to remember every time. You know, if you are stressed at work, thinking too hard about all of the different steps necessary to configure a Docker host on a cloud provider, which I was doing this afternoon, that’s time that’s wasted from solving problems.

[00:38:25] That’s technical waste, really. So we’d like to try to minimize the degree to which we have to worry about those things. And we do have to worry about those things because they’re part of our job too, but it’s not where the real value is. You know, the real value is solving people’s problems with software.

[00:38:43] And so we want to try and maximize that while minimizing the first one, and techniques like automation and so on and version control are vital to those kinds of things, it seems to me.

[00:38:56] Brian Leroux: It’s funny, because over the years I’ve noticed, I haven’t been doing this as long as you, but I’ve been doing it for a while.

[00:39:01] And when I started, version control was still pretty new and shiny. We had just moved past Visual SourceSafe into this crazy new open source thing called SVN. And writing tests was a very racy proposition, you know, like, “Oh, I don’t know if we have time for tests.” Nowadays, yeah, that statement would be met with a raised eyebrow.

[00:39:21] Today it feels like things like continuous integration and infrastructure as code are just about accepted practice, but they’re still pretty, you know, new, still pretty racy for people.

[00:39:36] Dave Farley: Yeah. I still have frequent debates with people where, you know, they’re arguing the nuance of, well, automated testing, but also continuous integration, and really, what it seems to me is that they’re trying to use that to avoid doing it.

[00:39:56] Yeah.

[00:39:56] Brian Leroux: It’s funny, ’cause it seems like it might save time to not do the thing, but in reality, without these guardrail things, you’re going to move way slower, way

[00:40:05] Dave Farley: slower. Yes. I was talking about that with a group of people this afternoon, again referring to the Accelerate book. What the evidence says is that if you practice continuous delivery and all of those, you know, associated approaches to development, you spend 44% more time producing useful things in software, building new features, than teams that don’t.

[00:40:26] So it’s a false economy to avoid these things. And circling back to, you know, what we’re talking about, those are the kinds of advantages I think you would expect to see if we had identified engineering practices for software. Yes: you deliver better software faster. So I’ve got a, I’ve

[00:40:57] Brian Leroux: got a... is this a trick question? Because I don’t even know if I want to ask it, but, you know, how do we get more

[00:41:03] Brian Leroux: people to do this? I cringe when I think about certification in our industry. It’s become basically a tool for vendors to market and, in many cases, a tool for kind of grifting newer people to the industry. So I don’t love it. And yet it feels like maybe we’re ready for that.

[00:41:22] And ironically, I don’t even think a certification for software engineering would have a whole lot to do with technology. It would have more to do with

[00:41:31] Dave Farley: these principles. Yes. I would agree with that. So that was certainly in the back of my mind when I was writing this book. I was always thinking, oh, I would love for universities to pick this up as this kind of material.

[00:41:49] Not for commercial reasons, not for my own self-interest, but I mean, you know, as a way of adding something to the profession, to pick these sorts of ideas up and teach them. Totally, totally, it needs to

[00:42:02] Brian Leroux: be a part of a curriculum somehow. And it should be something where we can say, yes, this person is aware that testing is a good idea.

[00:42:11] That’s

[00:42:12] Dave Farley: all. Like you, I’m wary of certification schemes, because I think our industry has suffered from them. But also, if you look at other professions, and I honestly don’t know what I think about this, if somebody breaks the rules of the profession, if a doctor breaks the rules or a lawyer breaks the rules, they’re ejected from the profession and they’re not allowed to practice.

[00:42:40] If somebody fakes emissions results in the US, they go to jail, but they could come out of jail when they’ve finished their term and still write software for a living. Nobody’s going to check. Right?

[00:42:54] Brian Leroux: And this stuff has

[00:42:55] Dave Farley: real context. Whether it’s a good thing or a bad thing, it’s different.

[00:43:01] Brian Leroux: It seems that with the maturity of a discipline, it does trend towards some kind of certification, with the good and the bad that comes with that. Yes. But it definitely feels like software could use it, because we’ve got right now, you know, either vendor certification, which is mostly just trying to sell people stuff, or we’ve got, you know, the university-level teaching, which is kind of more low-level history than it is actual practical

[00:43:27] Dave Farley: Here’s how you build a thing and do it with a team is the other big key.

[00:43:33] Simon MacDonald: Yeah, I know that this idea has been floated before. I definitely read a book in the early 2000s about having a software engineering certificate program. And not, you know, you’re a Java certified developer, or you’re a cloud certified developer.

[00:43:47] You are a software engineer. And I was just trying to look at my bookshelf there and I couldn’t find it, which means it’s probably downstairs on another bookshelf, but I’ll follow up with that. But Dave, you were just gonna make a comment on

[00:43:59] Dave Farley: that. I was going to agree. I think that the other part of the puzzle, in terms of wanting to bring this better discipline, for want of a better term, is that employers need to take more responsibility for training people.

[00:44:16] I think that with the commercial drivers of our industry, what nearly everybody wants is to employ somebody that’s using precisely the technology that they already have, in precisely the way that solves precisely the problem that they’re trying to address. What would be a better focus, a better outcome, would be if they employed people with talent and potential and helped to coach them to take best advantage of that talent and potential.

[00:44:43] You know, I think that good recruitment is hiring people that are better than you and helping them to get better, and many organizations struggle with that, I think. So I think organizations taking some responsibility for training people and allowing people to learn and develop their careers is important.

[00:45:06] And it is, in the best places where I’ve worked.

[00:45:10] Simon MacDonald: Yeah, I always cringe whenever I see a job posting where it’s like “five years’ experience in X technology.” What you really need to do is hire smart people. That means that they’re good at picking up things, not necessarily that they’ve had five years writing one particular framework or one particular language.

[00:45:28] Dave Farley: My anecdote, sorry, I interrupted. My anecdote will tell you how long this has gone on. I saw an advert for a Java programmer with nine years’ experience needed, and this was in 2000. Java had only been around for about 5 or 6 years at that point.

[00:45:50] It’s just crazy. For the book, I worked out that during the course of my career. I’ve programmed commercial systems in something like about 20 different languages. I didn’t bother looking at frameworks or operating systems. Well, there’s loads of those as well, but about 20 different programming languages during the course of my career, these things are much more ephemeral than the kinds of things that we’ve been talking about.

[00:46:13] These things change, and things like modularity, cohesion, separation of concerns, and experimentation don’t, and that’s the point really. You know, we should be training people to be good, as you say, good at learning, to be able to build on their skills.

[00:46:31] Simon MacDonald: Yeah. And that’s where I wanted to jump in with a question from one of the audience members here. Alper had asked, you know, how can a grumpy old man avoid missing out on new techniques and processes that may be useful, you know, to see if they help with modularization, loose coupling, etc.

[00:46:46] And he signs it as a grumpy old man, which I am as well. So co-signed

[00:46:51] Dave Farley: No, he’s not as grumpy or as old as me, but that’s, I think, why I think that this model, this engineering model, helps. So, you know, at the end of the book, I do a little exercise where I try and apply this kind of engineering thinking to a topic that I know very little about.

[00:47:15] So I try to apply engineering thinking to see whether it helps my ability to understand machine learning, building machine learning systems, at the end of the book. And I think it does. So this is kind of a version of something that I adopted with building systems myself for a long time, which is to start evaluating things not based on the shiny technology, but on what they’re going to do for me, what job they’re going to fulfill and how they’re going to,

[00:47:43] so, how are they going to improve the situation? So if a technology doesn’t allow me to iterate quickly, experiment with changes and so on, then I’m going to rule it out. So if I can’t version control it, I’m not interested. If I can’t automate the deployment of it, I’m not interested, whatever it does, really.

[00:48:04] I’m not really interested because I can’t do the things that I need to do. If it’s a programming idea, is it going to improve the modularity, cohesion, separation of concerns, abstraction in my programming? I’ll give you an example. One of my personal dislikes: I don’t like the async/await mechanism that occurs in a bunch of languages, because I think that you build much simpler systems

[00:48:32] if you just treat them as a fully asynchronous system.

At this point we ran into problems with OBS but were very near wrapping up.

Next Month

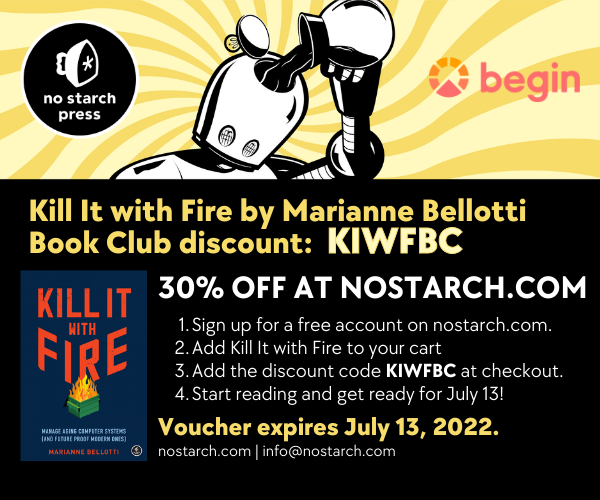

Join us for our July meetup where we will chat with Marianne Bellotti about her book Kill It with Fire. Thanks to our friends at No Starch Press for making a 30% off coupon available for our community to purchase the book before July 13th.

Don’t miss the next book club meeting

Stay in touch by:

- Joining the Architect Discord, where we will be hosting the book club video chat.

- Following the @begin Twitter account, where we will send out polls for future book club selections.

Or if you prefer emails join the book club newsletter. We promise that we will only use this mailing list for book club purposes like meet-up reminders and book club selections. We do not sell your personal data.